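Types of Ensembles

1. Voting-based methods

- The final result is decided by a vote over the predictions made by several estimators.

- Types

- Bagging: combines models that use the same algorithm, but trains each one on a different sample of the training data (bootstrap sampling, i.e. drawing rows with replacement).

- Voting: combines models that use different algorithms.

2. Boosting

- Combines weak learners into a more accurate and powerful strong learner, typically by training them one after another so that each new learner focuses on the errors of the previous ones.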
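The bagging idea above can be sketched from scratch: train several copies of the same algorithm, each on a bootstrap sample of the training rows, then combine them by majority vote. This is a minimal illustration, assuming scikit-learn's bundled breast-cancer dataset and decision trees; the helpers `fit_bagging` and `predict_bagging` are hypothetical names, and scikit-learn's own `BaggingClassifier` offers more options (for example feature subsampling and out-of-bag scoring).

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def fit_bagging(X, y, n_estimators=25, random_state=0):
    """Hypothetical helper: fit one decision tree per bootstrap sample."""
    rng = np.random.default_rng(random_state)
    n = len(X)
    models = []
    for i in range(n_estimators):
        idx = rng.integers(0, n, size=n)  # draw n row indices with replacement
        tree = DecisionTreeClassifier(random_state=i)
        tree.fit(X[idx], y[idx])
        models.append(tree)
    return models


def predict_bagging(models, X):
    """Hypothetical helper: majority vote over the trees' predicted labels."""
    preds = np.array([m.predict(X) for m in models])  # shape (n_estimators, n_samples)
    # For each sample (one column), pick the label predicted most often
    return np.array([np.bincount(col).argmax() for col in preds.T])


X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

models = fit_bagging(X_train, y_train)
bagged_acc = (predict_bagging(models, X_test) == y_test).mean()
single_acc = (models[0].predict(X_test) == y_test).mean()
print("single tree:", single_acc, "bagged:", bagged_acc)
```

Every tree uses the same algorithm; only the training rows differ, which is what distinguishes bagging from the voting approach described next.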

Voting

Voting의 유형

- hard voting

- 다수의 추정기가 결정한 예측값들 중 많은 것을 선택하는 방식

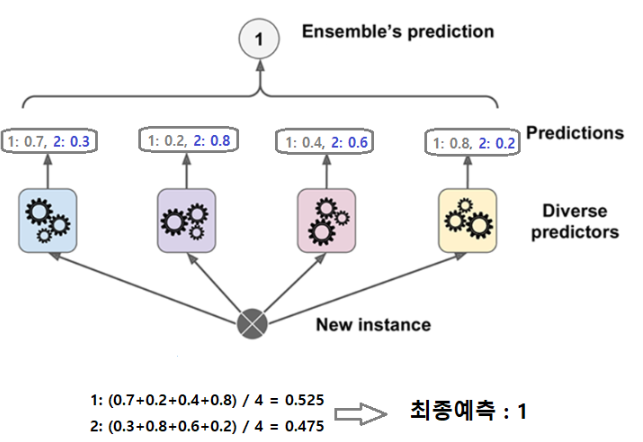

- soft voting

- 다수의 추정기에서 각 레이블별 예측한 확률들의 평균을 내서 높은 레이블값을 결과값으로 선택하는 방식

- 일반적으로 soft voting이 성능이 더 좋다.

- Voting은 성향이 다르면서 비슷한 성능을 가진 모델들을 묶었을때 가장 좋은 성능을 낸다.
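To see how the two rules can disagree, here is a small hand-computed example with NumPy. The probabilities are made up for illustration (three hypothetical models, one sample, two classes); they are not taken from the wine experiment below.

```python
import numpy as np

# Hypothetical predicted probabilities from three classifiers for ONE sample,
# over two classes [class 0, class 1].
probas = np.array([
    [0.90, 0.10],  # model A: very confident in class 0
    [0.40, 0.60],  # model B: leans slightly toward class 1
    [0.45, 0.55],  # model C: leans slightly toward class 1
])

# Hard voting: each model votes for its top class; the most frequent label wins.
votes = probas.argmax(axis=1)  # [0, 1, 1]
hard_result = np.bincount(votes).argmax()

# Soft voting: average the class probabilities, then take the highest average.
avg_proba = probas.mean(axis=0)  # [0.5833..., 0.4166...]
soft_result = avg_proba.argmax()

print("votes:", votes, "-> hard:", hard_result)
print("average proba:", avg_proba, "-> soft:", soft_result)
```

Hard voting picks class 1 because two of the three models lean that way, while soft voting picks class 0 because model A's strong confidence outweighs the weak preference of the other two.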

VotingClassifier 클래스 이용

- 매개변수

- estimators : 앙상블할 모델들 설정. ("추정기이름", 추정기) 의 튜플을 리스트로 묶어서 전달

- voting: voting 방식. hard(기본값), soft 지정
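As a quick check of what `voting='soft'` does, the sketch below fits a VotingClassifier on scikit-learn's bundled iris dataset (chosen only so the example is self-contained; it is not the wine data used below) and confirms that its `predict_proba` equals the plain average of the fitted sub-models' `predict_proba`. The fitted copies are read from the `named_estimators_` attribute; the three model choices are arbitrary.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# estimators: a list of ("name", estimator) tuples
estimators = [
    ("lr", LogisticRegression(max_iter=1000)),
    ("dt", DecisionTreeClassifier(max_depth=3, random_state=0)),
    ("nb", GaussianNB()),
]
soft_clf = VotingClassifier(estimators, voting="soft").fit(X, y)

# named_estimators_ holds the fitted clone of each sub-model, keyed by its name
manual_avg = np.mean(
    [est.predict_proba(X) for est in soft_clf.named_estimators_.values()], axis=0
)

print(np.allclose(soft_clf.predict_proba(X), manual_avg))
```

With the default `weights=None` every model counts equally; passing a `weights` list turns this into a weighted average.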

Wine dataset

import pandas as pd
import numpy as np

wine = pd.read_csv('data/wine.csv')

# Encode the quality column as consecutive integer labels
from sklearn.preprocessing import LabelEncoder
encoder = LabelEncoder()
wine['quality'] = encoder.fit_transform(wine['quality'])

# Split into features (X) and target (y); the target is the wine color
y = wine['color']
X = wine.drop(columns='color')

# Split into train/test sets, keeping the color ratio the same in both (stratify)
from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y)

Modeling

from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.svm import SVC
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

knn = KNeighborsClassifier(n_neighbors=5)
dt = DecisionTreeClassifier(max_depth=5)
svm = SVC(C=0.1, gamma='auto', probability=True)  # to use SVC in soft voting, set probability=True (enables predict_proba)
lg = LogisticRegression()

estimators = [('knn', knn), ('dt', dt), ('svm', svm), ('lg', lg)]

# Train each model on its own and compare train/test accuracy
for name, model in estimators:
    model.fit(X_train, y_train)
    pred_train = model.predict(X_train)
    pred_test = model.predict(X_test)
    print(name + ":", accuracy_score(y_train, pred_train), accuracy_score(y_test, pred_test))

# knn: 0.9599753694581281 0.9421538461538461
# dt: 0.9901477832512315 0.9821538461538462
# svm: 0.8918308702791461 0.8898461538461538
# lg: 0.9747536945812808 0.9704615384615385

Building an ensemble model with VotingClassifier

Hard Voting

from sklearn.ensemble import VotingClassifier

v_clf = VotingClassifier(estimators)  # voting='hard' is the default; pass voting='soft' for soft voting
v_clf.fit(X_train, y_train)  # fits a clone of each estimator on the training data


pred_train = v_clf.predict(X_train)
pred_test = v_clf.predict(X_test)
accuracy_score(y_train, pred_train), accuracy_score(y_test, pred_test)
# (0.9667487684729064, 0.9581538461538461)

Soft Voting

from sklearn.ensemble import VotingClassifier

v_clf = VotingClassifier(estimators, voting="soft")  # soft voting: average predict_proba across the estimators
v_clf.fit(X_train, y_train)


pred_train = v_clf.predict(X_train)
pred_test = v_clf.predict(X_test)
accuracy_score(y_train, pred_train), accuracy_score(y_test, pred_test)
# (0.9802955665024631, 0.9686153846153847)

# On this train/test split, soft voting scores higher than hard voting (test accuracy 0.9686 vs 0.9582).

Voting with GridSearch

from sklearn.model_selection import GridSearchCV

# Each sub-model is a GridSearchCV that tunes its own hyperparameters with 5-fold CV
dt = DecisionTreeClassifier()
gs_dt = GridSearchCV(dt, param_grid={'max_depth': range(1, 10)}, cv=5, n_jobs=-1)

svm = SVC(probability=True)  # required for soft voting
gs_svm = GridSearchCV(svm, param_grid={'C': [0.01, 0.1, 0.5, 1], 'gamma': [0.01, 0.1, 0.5, 1, 5]}, cv=5, n_jobs=-1)

estimators2 = [('gs_dt', gs_dt), ('gs_svm', gs_svm)]
v_clf2 = VotingClassifier(estimators2, voting='soft')
v_clf2.fit(X_train, y_train)

pred_train = v_clf2.predict(X_train)
pred_test = v_clf2.predict(X_test)
accuracy_score(y_train, pred_train), accuracy_score(y_test, pred_test)
# (0.9802955665024631, 0.9686153846153847)
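When the sub-models are GridSearchCV objects, each one runs its own cross-validated search during `fit`, and the results can be inspected afterwards through `named_estimators_`. VotingClassifier fits clones of its estimators, so the original `gs_dt` and `gs_svm` objects themselves stay unfitted. A minimal sketch, assuming the iris dataset and a smaller grid than above so that it runs quickly:

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import VotingClassifier
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

gs_dt = GridSearchCV(DecisionTreeClassifier(random_state=0),
                     param_grid={"max_depth": [1, 2, 3, 4, 5]}, cv=5)
# probability=True so the searched SVC exposes predict_proba for soft voting
gs_svm = GridSearchCV(SVC(probability=True, random_state=0),
                      param_grid={"C": [0.1, 1], "gamma": [0.1, 1]}, cv=5)

v_clf2 = VotingClassifier([("gs_dt", gs_dt), ("gs_svm", gs_svm)], voting="soft")
v_clf2.fit(X_train, y_train)

# Each fitted GridSearchCV clone keeps the hyperparameters it selected
for name, search in v_clf2.named_estimators_.items():
    print(name, search.best_params_, round(search.best_score_, 3))

print("test accuracy:", v_clf2.score(X_test, y_test))
```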
